Technologies that truly revolutionize how we live come along only occasionally. Universal Serial Bus (USB) Type-C is one of those technologies. USB Type-C gives users more capability (data, video and power) than any other connector. It is also convenient: the plug is small and can be inserted either way up. Over the next two years, most of our electronics are likely to adopt it.

This leap in technology will come with several new terms that you will start to hear. While they may initially sound a little awkward, after you use them for a bit, they’ll become second nature.

Familiar terms with USB Type-C application

Some terms remain the same. D+ (D-Plus or DP) and D- (D-Minus or DM) have not changed. In fact, they have become more prevalent because the connector requires these signals to be present, unlike on some previous connectors. VBus and GND have also not gone anywhere.

Other terms are old but have assumed new labels. In 2001, the Universal Serial Bus Implementers Forum (USB-IF) released USB On-The-Go (OTG) to allow a new class of products that could act as either a host or a device. For example, when I connect my mobile phone to a laptop, I want the phone to act as a device. However, when I connect my phone to a printer, the phone should act as the host. OTG was never universally adopted, largely because of connector complexity. It required additional connector pins, which made choosing and using connectors confusing. The new USB Type-C standard addresses this from the start with its 24-pin connector.

New roles and ports in USB Type-C

A new term making its debut with USB Type-C is the downstream-facing port (DFP). The DFP is the port we would commonly associate with the host. Once power becomes flexible, the host no longer has to be the power source, and the direction of power and the direction of data can be negotiated separately. In the default state, the DFP sources both VBus and VCONN. Adding a Power Delivery (PD) controller lets the port adapt to the system's needs. For example, a PD controller allows a phone to act as the host while still receiving power.
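To make the idea of separate power and data roles concrete, here is a minimal Python sketch. It models a port as a data role plus a power role and applies a swap loosely modelled on the USB PD power-role swap (PR_Swap) message. The names `PortState`, `default_dfp` and `power_role_swap` are hypothetical and are not part of any TI or USB-IF API. The sketch also assumes that a power-role swap leaves VCONN ownership untouched, because PD handles VCONN with its own swap.

```python
from dataclasses import dataclass, replace
from enum import Enum


class DataRole(Enum):
    DFP = "DFP"  # downstream-facing port: host side of the data link
    UFP = "UFP"  # upstream-facing port: device side of the data link


class PowerRole(Enum):
    SOURCE = "source"  # supplies VBus
    SINK = "sink"      # draws power from VBus


@dataclass(frozen=True)
class PortState:
    data_role: DataRole
    power_role: PowerRole
    vconn_source: bool  # True if this port supplies VCONN


def default_dfp() -> PortState:
    # Default state without a PD contract: the DFP is the host and
    # sources both VBus and VCONN.
    return PortState(DataRole.DFP, PowerRole.SOURCE, vconn_source=True)


def power_role_swap(port: PortState) -> PortState:
    # Hypothetical model of a PD power-role swap: only the power role flips.
    # The data role stays the same, and VCONN ownership is left alone
    # (PD uses a separate VCONN swap for that).
    flipped = PowerRole.SINK if port.power_role is PowerRole.SOURCE else PowerRole.SOURCE
    return replace(port, power_role=flipped)


# A phone acting as USB host (DFP) while being charged (sink).
phone = power_role_swap(default_dfp())
print(phone.data_role.value, phone.power_role.value)  # DFP sink
```

Keeping the two roles as independent fields mirrors the point of the paragraph above. Without PD the pair is fixed (DFP with source, UFP with sink). With PD, either field can change on its own.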

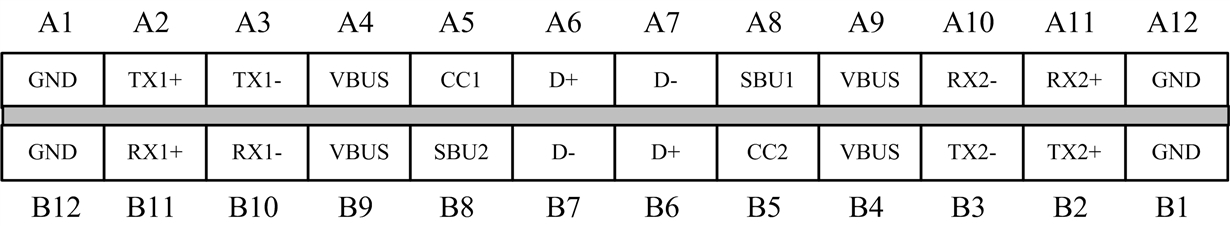

The converse is the upstream-facing port (UFP), which we would commonly associate with the device. In the default state, the UFP sinks VBus and supports data. A port that can operate as either a DFP or a UFP is called a Dual-Role Port (DRP), and it can change roles dynamically. Enabling such versatile roles on a legacy connector (such as USB 2.0 or USB Standard-A) requires complex controllers and software on both the host and the end device. The new 24-pin connector, however, uses its configuration channel (CC) pins to detect an attachment and determine the plug orientation, which tells each side which row of pins is in use. The CC pins also establish which side is the DFP and which is the UFP. TI's TUSB320 family handles USB Type-C configuration-channel control and mode configuration for USB 2.0 designs and is available today.
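The CC mechanism can be sketched in a few lines. A DFP presents a pull-up (Rp) on both CC pins. A UFP presents a 5.1 kΩ pull-down (Rd). A powered cable or some accessories present a different pull-down (Ra). A DRP alternates between presenting Rp and Rd until it attaches. The Python sketch below works from the DFP's side and is deliberately simplified. It assumes the analog measurement has already been turned into one of three termination states, and it ignores voltage thresholds and debouncing. The names `Term` and `classify_attach` are hypothetical.

```python
from enum import Enum
from typing import Optional, Tuple


class Term(Enum):
    """Termination a DFP can sense on one CC pin (simplified)."""
    OPEN = "open"  # nothing connected on this pin
    RD = "Rd"      # 5.1 kOhm pull-down presented by a UFP (sink)
    RA = "Ra"      # pull-down from a powered cable or accessory that needs VCONN


def classify_attach(cc1: Term, cc2: Term) -> Tuple[str, Optional[int]]:
    """Return (what is attached, receptacle CC pin carrying configuration).

    Sketch from the point of view of a DFP that presents Rp on both CC
    pins and reads back how the far side terminates them.
    """
    if cc1 is Term.RD and cc2 is Term.RD:
        return ("debug accessory", None)
    if cc1 is Term.RA and cc2 is Term.RA:
        return ("audio adapter accessory", None)
    if cc1 is Term.RD:
        # Configuration runs over CC1, which reveals the plug orientation.
        # If CC2 shows Ra, that is the pin where the DFP would supply VCONN.
        return ("UFP attached", 1)
    if cc2 is Term.RD:
        # Configuration runs over CC2: the plug is the other way up.
        return ("UFP attached", 2)
    # Open/open, or Ra without Rd (e.g. a powered cable with nothing on the far end).
    return ("no UFP attached", None)


print(classify_attach(Term.OPEN, Term.RD))  # ('UFP attached', 2)
```

The orientation result is what lets a controller route the high-speed signals to the correct row of pins without any help from software on the far device.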

USB Type-C is also future-proofed for higher data speeds. This covers everything from the electrical capability of the connector and wiring to a standard that explicitly accounts for active cables. For active cables, the specification defines VCONN, a 5 V, 200 mA supply that can power a signal conditioner inside the cable for redriving or retiming.
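Taking the 5 V, 200 mA figure above at face value, the power budget for an in-cable redriver or retimer works out as follows:

```latex
P_{\mathrm{VCONN}} = V_{\mathrm{VCONN}} \times I_{\mathrm{VCONN}} = 5\,\mathrm{V} \times 0.2\,\mathrm{A} = 1\,\mathrm{W}
```

In other words, any active circuitry in the cable has to fit within a 1 W budget.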

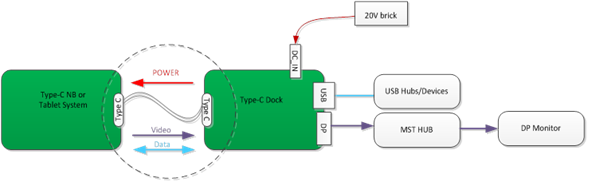

The biggest area of advancement in the USB standard is Alternate Mode, which allows a guest protocol to run on the USB cable. Users can then exchange USB data, power and another protocol, typically video, over a single cable. There are several protocols from which to choose. The most widely known are DisplayPort (announced last September), Mobile High-Definition Link (MHL) (announced last November), Thunderbolt and PCI Express. TI's HD3SS460 USB Type-C cross-point switch supports Alternate Mode and is also available today.
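Entering an Alternate Mode is negotiated over the CC line using USB Power Delivery structured vendor-defined messages. Once a mode is entered, the connector's four high-speed differential pairs are shared between the guest protocol and USB. Re-routing those pairs for the active mode and the plug orientation is the kind of job a cross-point switch handles. The Python sketch below shows that sharing for DisplayPort. It assumes single-lane USB SuperSpeed (one transmit pair plus one receive pair) and one differential pair per DisplayPort lane. The `Mode` enum and the `allocate_pairs` helper are hypothetical and do not describe the HD3SS460's actual control interface.

```python
from enum import Enum

# The 24-pin receptacle has four high-speed differential pairs
# (TX1, RX1, TX2, RX2) plus a separate USB 2.0 D+/D- pair.
HIGH_SPEED_PAIRS = 4


class Mode(Enum):
    USB_ONLY = "USB SuperSpeed only"
    DP2_USB = "2-lane DisplayPort + USB SuperSpeed"
    DP4 = "4-lane DisplayPort"


def allocate_pairs(mode: Mode) -> dict:
    """Hypothetical helper: how the four high-speed pairs are shared."""
    usb_pairs = 0 if mode is Mode.DP4 else 2  # one TX pair + one RX pair
    dp_lanes = {Mode.USB_ONLY: 0, Mode.DP2_USB: 2, Mode.DP4: 4}[mode]  # one pair per lane
    used = usb_pairs + dp_lanes
    if used > HIGH_SPEED_PAIRS:
        raise ValueError(f"{mode.value} needs {used} pairs; only {HIGH_SPEED_PAIRS} exist")
    return {
        "usb_superspeed_pairs": usb_pairs,
        "dp_lanes": dp_lanes,
        "unused_pairs": HIGH_SPEED_PAIRS - used,
        # D+/D- are separate pins, so USB 2.0 data remains available in these modes.
        "usb2_available": True,
    }


for mode in Mode:
    print(mode.value, allocate_pairs(mode))
```

Because D+ and D- sit outside the four high-speed pairs, even the 4-lane DisplayPort case can keep a USB 2.0 data link alongside the video.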

New vocabulary just the beginning of the new standard’s impact

The adoption of USB Type-C will revolutionize how consumers interact with their devices, and it's beginning to reshape our vocabulary surrounding its functions and implementation. Where do you expect to see USB Type-C and USB Power Delivery implemented over the next year?

Additional resources

- Read other blog posts about USB Type-C.

- Learn about TI’s USB portfolio.

- View the datasheet for the TUSB320 device.

- View the datasheet for the HD3SS460 device.